The challenges of data contextualization for vision AI

Scaling artificial intelligence for the enterprise: The challenge of data contextualization and how to solve it

As your company deploys artificial intelligence (AI) projects, you will likely face unexpected technical challenges that will slow your delivery of business benefits. One big challenge is data contextualization, and only a few technology providers can help you overcome it.

This article helps you understand contextualization for vision AI projects. The goal is to help you avoid the obstacles contextualization may present when you try to scale your project.

What is the real challenge?

When companies get started with AI, many think the hard part is to develop models for machine learning (ML). That’s where they focus most of their attention and budget.

Newcomers to AI may not understand that ML is the “easy” part of making it all work. Many organizations can do ML well, and ML frameworks are well documented and widely available.

Managing large amounts of data and operationalizing AI is much harder than mastering ML.

The real challenge is to make your data useful through proper contextualization. That’s the process by which you prepare your data for the variety of uses to which you will put it.

When you contextualize, you process the data into consistent formats and nomenclature. Then you associate it with a data model. Finally, you make the data accessible in such a way that various departments or business units can process it efficiently and use it for their own purposes.
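To make the three steps concrete, here is a minimal Python sketch. It assumes a hypothetical naming convention (`KIND-0000`) and made-up records from three source systems; none of these names come from a real platform. It normalizes asset labels, groups the records under one canonical ID, and returns a structure any department could query.

```python
import re
from collections import defaultdict


def canonical_asset_id(raw: str) -> str:
    """Normalize labels such as 'pipe_12' or 'Pipe 12' to 'PIPE-0012'.

    The KIND-0000 convention is an assumption made for this sketch.
    """
    match = re.fullmatch(r"\s*([A-Za-z]+)[\s_\-]*(\d+)\s*", raw)
    if match is None:
        raise ValueError(f"unrecognized asset label: {raw!r}")
    kind, number = match.groups()
    return f"{kind.upper()}-{int(number):04d}"


def contextualize(records):
    """Group raw records under canonical asset IDs (a stand-in for a data model)."""
    by_asset = defaultdict(list)
    for record in records:
        asset_id = canonical_asset_id(record["asset"])
        by_asset[asset_id].append(
            {"source": record["source"], "value": record["value"]}
        )
    return dict(by_asset)


# Hypothetical records: three systems, three spellings of asset names.
raw_records = [
    {"source": "inspection", "asset": "pipe_12", "value": "corrosion detected"},
    {"source": "maintenance", "asset": "PIPE-0012", "value": "service due"},
    {"source": "sensor", "asset": "Pump 7", "value": "vibration high"},
]
model = contextualize(raw_records)
```

In this sketch, the inspection and maintenance records end up under the same key, so a maintenance engineer and a data scientist would both find them with a single lookup.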

Given the huge volume of data involved in fast-growing vision AI projects, companies often find it hard to contextualize their own data. They’re likely to need help.

What is data contextualization?

Let’s look at a simple example of contextualization to illustrate the principle.

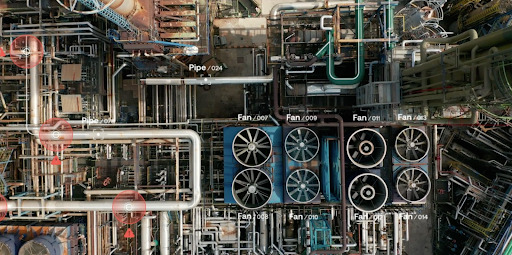

The AI lab of an oil and gas company has built a computer vision model to detect corrosion on pipes. With that model, the team can detect corrosion on pipes across various environments.

Let’s suppose the AI model can identify both the corrosion and the kind of asset on which it has occurred.

As helpful as that information is, maintenance engineers would find it useful to have more context.

On what kind of pipe has the corrosion occurred? Where is the pipe located? How fast does the pipe’s condition change, and when was that section of pipe initially scheduled for routine maintenance?

The process of contextualization helps establish meaning—or context—across various data elements that correspond to an event.

Here’s an example. The camera that captured the image of pipe corrosion also added metadata to the image. The metadata includes the camera’s location, orientation, and position when it took the picture.

The oil and gas company can match that information in the metadata with the design file or geographic information system (GIS) file of the infrastructure where the pipe is located. The company can then deduce the location of the corrosion and assess how fast the severity of corrosion has changed. The company can also determine that the image represents a specific section of pipe within the infrastructure, and it can get the pipe’s part number.

With the part number identified, the company can look up the maintenance schedule for that section of pipe. If routine maintenance is scheduled too far in the future, a maintenance system can reschedule the section for earlier attention.
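The pipe example can be sketched in a few lines of Python. This is a simplified illustration, not a description of any real system: it assumes the camera position has already been converted to the same local coordinates as the GIS file, it matches on position only (ignoring orientation), and the part numbers, dates, and 30-day threshold are all hypothetical.

```python
import math
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class PipeSection:
    part_number: str
    x: float  # section midpoint in metres, local site coordinates (assumed)
    y: float
    next_maintenance: date


def nearest_section(sections, x, y):
    """Return the pipe section whose midpoint is closest to (x, y)."""
    return min(sections, key=lambda s: math.hypot(s.x - x, s.y - y))


def reschedule_if_late(section, detected_on, max_wait_days=30):
    """Pull maintenance forward if it is scheduled after the allowed wait."""
    deadline = detected_on + timedelta(days=max_wait_days)
    if section.next_maintenance > deadline:
        section.next_maintenance = deadline
        return True
    return False


# Hypothetical GIS extract and image metadata.
sections = [
    PipeSection("P-100", 0.0, 0.0, date(2025, 12, 1)),
    PipeSection("P-200", 50.0, 10.0, date(2025, 9, 1)),
]
image_metadata = {"x": 48.0, "y": 12.0, "captured_on": date(2025, 1, 15)}

hit = nearest_section(sections, image_metadata["x"], image_metadata["y"])
changed = reschedule_if_late(hit, image_metadata["captured_on"])
```

Here the image is matched to section `P-200`, whose maintenance date is moved up because it fell more than 30 days after the detection. A production system would also use camera orientation and the asset geometry rather than a nearest-midpoint test.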

What makes contextualization complex?

The contextualization process is relatively simple for structured data. Enterprise resource planning (ERP) systems solved the problem years ago. Contextualization in ERP systems is not complicated because the data is organized into standardized formats and related processes are mostly transactional.

With ERP, one central database collects data from all areas of operations, and the data is made available to all business functions and operating units that share the same ERP instance.

How is contextualization different for vision-based AI projects?

Data used for vision AI applications may be unstructured.

Unlike most of the data in an ERP system, data used for vision AI may not consist of alphanumeric characters neatly arranged in tabular format. Instead, the data for vision AI may be encoded in the form of pixels, rasters, point clouds, and many other formats represented below.

How do you unlock the important elements of this information and format it so that it’s useful across applications? How do you share the data efficiently, in ways that make it readily available when needed—without additional processing?

Solving the problem: The need for a unified data model

The first step in building data-driven applications is to develop a unified data model that integrates and correlates data from enterprise and operational data stores.

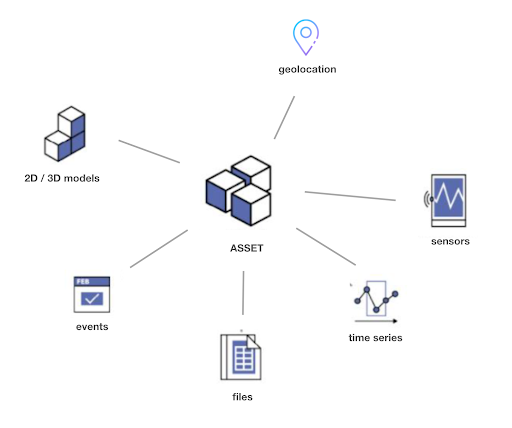

Asset-intensive operators—including companies in power, petrochemicals, and oil and gas—need a unified data model that defines a hierarchy of assets. The hierarchy must model relationships among equipment, IoT sensors (or tags) that monitor the equipment, and any visual data related to the equipment or sensors.

First, data scientists must correlate data from different sources:

- Historical data from the equipment

- Data collected from sensors

Each data element may be linked to one or more assets.
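One way to picture such a model is the small Python sketch below. It is an illustration under stated assumptions: the class names (`Asset`, `DataElement`, `AssetModel`) and the sample IDs are hypothetical. Assets form a hierarchy through a parent reference, and each data element (sensor tag, image, historical record) lists the assets it relates to.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Asset:
    asset_id: str
    parent_id: Optional[str] = None  # None marks the top of the hierarchy


@dataclass
class DataElement:
    element_id: str
    kind: str          # e.g. "sensor", "image", "history"
    asset_ids: tuple   # one element may be linked to several assets


class AssetModel:
    def __init__(self):
        self.assets = {}
        self.elements = []

    def add_asset(self, asset):
        self.assets[asset.asset_id] = asset

    def link(self, element):
        unknown = [a for a in element.asset_ids if a not in self.assets]
        if unknown:
            raise KeyError(f"unknown assets: {unknown}")
        self.elements.append(element)

    def descendants(self, asset_id):
        """Return asset_id plus every asset below it in the hierarchy."""
        found = {asset_id}
        grew = True
        while grew:
            grew = False
            for asset in self.assets.values():
                if asset.parent_id in found and asset.asset_id not in found:
                    found.add(asset.asset_id)
                    grew = True
        return found

    def elements_for(self, asset_id):
        """IDs of all data elements linked to an asset or its sub-assets."""
        scope = self.descendants(asset_id)
        return [e.element_id for e in self.elements
                if scope.intersection(e.asset_ids)]


model = AssetModel()
model.add_asset(Asset("plant"))
model.add_asset(Asset("pump-1", parent_id="plant"))
model.add_asset(Asset("pipe-1", parent_id="plant"))
model.link(DataElement("tag-17", "sensor", ("pump-1",)))
model.link(DataElement("img-042", "image", ("pump-1", "pipe-1")))
```

Querying at the plant level returns both the sensor tag and the image, while querying `pipe-1` returns only the image that shows it.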

Then data scientists develop AI models.

With reliable AI models in hand, companies can use the models to improve the performance of assets or to predict their failure.

One possible approach: network data models

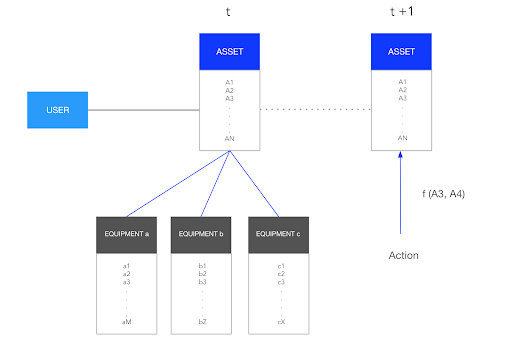

A network data model is a mathematical and digital structure used to represent physical assets, their components, and the relationships among them. Such data models also include functions such as events or actions that modify one or several attributes and thus the asset’s state.

The data may include the identity and attributes of the physical asset, the effects of a specific event or action, and its geolocation.

These data models can provide a good physical representation of the asset and its evolution over time. Newly collected data updates the attributes of the asset, giving an accurate picture at a specific moment. The new data also enables prediction of potential future states depending on a specific set of actions or events.
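As a rough illustration of these ideas, the Python sketch below stores assets as nodes, relationships as edges, and events as attribute changes with a timestamp. The class and attribute names are hypothetical, and the sketch assumes events arrive in chronological order. It covers only the "state at a given moment" part, not prediction.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Node:
    node_id: str
    attributes: dict
    # Each entry: (event date, attributes as they were just before the event)
    history: list = field(default_factory=list)


class NetworkModel:
    def __init__(self):
        self.nodes = {}
        self.edges = set()  # (source, relation, target)

    def add_node(self, node_id, **attributes):
        self.nodes[node_id] = Node(node_id, dict(attributes))

    def connect(self, source, relation, target):
        self.edges.add((source, relation, target))

    def apply_event(self, node_id, when, **changes):
        """Record the prior state, then update the node's attributes."""
        node = self.nodes[node_id]
        node.history.append((when, dict(node.attributes)))
        node.attributes.update(changes)

    def state_at(self, node_id, when):
        """Attributes in effect on a given date (events assumed in order)."""
        node = self.nodes[node_id]
        for event_date, before in node.history:
            if when < event_date:
                return before
        return node.attributes


net = NetworkModel()
net.add_node("pipe-1", corrosion="none", lat=43.6, lon=1.44)
net.add_node("valve-3", status="open")
net.connect("pipe-1", "feeds", "valve-3")

# Two inspection events change the pipe's state over time.
net.apply_event("pipe-1", date(2024, 3, 1), corrosion="light")
net.apply_event("pipe-1", date(2024, 9, 1), corrosion="severe")
```

Asking for the state of `pipe-1` in June 2024 returns the "light" corrosion reading, while the current attributes reflect the latest inspection.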

Network data models are common in industrial implementations, where they are typically paired with spatial and time series analytics.

What are the options to implement contextualization?

For organizations undertaking AI initiatives, only two methods are available for contextualizing data. You can:

- Contextualize it manually

- Use an industrial platform to automate contextualization through an efficient data model

Unfortunately, manual contextualization is not scalable. You can do it only for simple pilot projects and point-to-point applications that produce one clearly defined output for one clearly defined input.

Why it pays to use software from a third-party provider

Alteia is among the few software providers that contextualize data for AI applications.

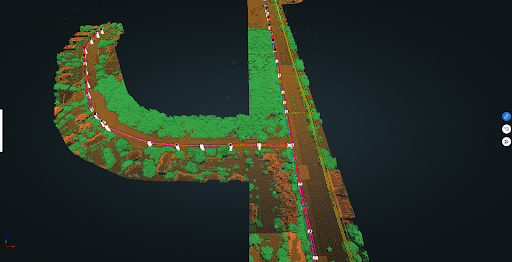

For example, we work with electrical utilities that contextualize their GIS network with their LiDAR data. The goal is to let them navigate their 3D point clouds so they can analyze vegetation encroachment and take action to control it.

- We ingest the utilities’ point clouds into the Alteia platform. Each point in a cloud corresponds to unique geolocation data.

- We also upload data from the companies’ GIS.

- We verify the quality and projection of the point clouds.

- We contextualize the data. This enables us to pull the right visual data almost instantly for a specific GIS section. When a system user selects a section of the GIS, they can automatically see its details in 3D. They can do this within seconds on an internet-connected laptop or mobile device, with no need for extra processing power.

In the Alteia platform, geolocation enables us to contextualize all the data elements we ingest.

In theory, you could do all four of the preceding steps manually. But for data collection in a much broader area, you would have to link every section of your GIS to a point cloud tile.

A point cloud often involves thousands of tiles, especially when it covers the distribution network for an entire country. To contextualize data on such a large scale would require thousands of manual tasks. And you would have to repeat them each time you update your data. So you must automate the process.
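The linking task itself is easy to automate once both datasets share a coordinate system. The Python sketch below is a generic illustration, not Alteia's implementation: it assumes each GIS section and each point cloud tile is described by a 2D bounding box in the same projection, and the IDs and coordinates are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    min_x: float
    min_y: float
    max_x: float
    max_y: float

    def intersects(self, other):
        """True when the two axis-aligned boxes overlap or touch."""
        return (self.min_x <= other.max_x and other.min_x <= self.max_x
                and self.min_y <= other.max_y and other.min_y <= self.max_y)


def link_sections_to_tiles(sections, tiles):
    """Map each GIS section ID to the IDs of the tiles its box overlaps."""
    return {
        section_id: sorted(
            tile_id for tile_id, tile_box in tiles.items()
            if section_box.intersects(tile_box)
        )
        for section_id, section_box in sections.items()
    }


# Hypothetical 100 m tiles and two line sections, same projection assumed.
tiles = {
    "tile_0_0": Box(0, 0, 100, 100),
    "tile_1_0": Box(100, 0, 200, 100),
    "tile_0_1": Box(0, 100, 100, 200),
}
sections = {
    "line-A": Box(20, 40, 150, 60),
    "line-B": Box(10, 120, 30, 180),
}
links = link_sections_to_tiles(sections, tiles)
```

This brute-force version compares every section with every tile, which is fine for a handful of tiles. For thousands of tiles, a spatial index would normally replace the inner loop, and the whole mapping can be rebuilt automatically whenever the data is updated.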

Alteia’s vision AI platform executes all contextualization processes automatically by using artificial intelligence.

We find common attributes among your datasets; then we contextualize your data to make it useful across your company.

Recap and summary

Apart from data scientists and IT professionals, few people in business are likely to understand data contextualization or its importance to your business.

Even data scientists and IT professionals may not fully understand the challenges of data contextualization for large-scale vision AI projects.

Because vision AI is relatively new, most IT professionals lack prior experience rolling out big AI implementations. So even the best of them may overlook the need to think about how they will contextualize massive amounts of disparate data for growing AI projects.

Progress in rolling out a project can stall until project managers find their way around obstacles they didn’t foresee.

Companies now have an easy, fast, and cost-effective way to manage large-scale contextualization: they can work with one of the handful of companies that provide contextualization as part of an AI platform as a service (PaaS) offering.

Such companies have helped deploy and scale enterprise AI systems many times. With their help, you can avoid many of the surprises and disappointments you’re likely to face if you try to do everything yourself.

To learn more about how Alteia can help you overcome the challenges of contextualization, and avoid other surprises in scaling enterprise AI, ask for a conversation with an Alteia expert.